In December 2024, Google DeepMind released the Gemini 2.0 Flash model and its first low-latency, audio-first "Live API". While our models were advancing, there was no easy on-ramp for web developers, and I knew several hard problems had to be solved to lower the barrier to entry. The Live API Web Console set out to solve them.

Along with designer Hana Tanimura and creative director Alexander Chen, I began to build what became Gemini's second-most popular GitHub repository.

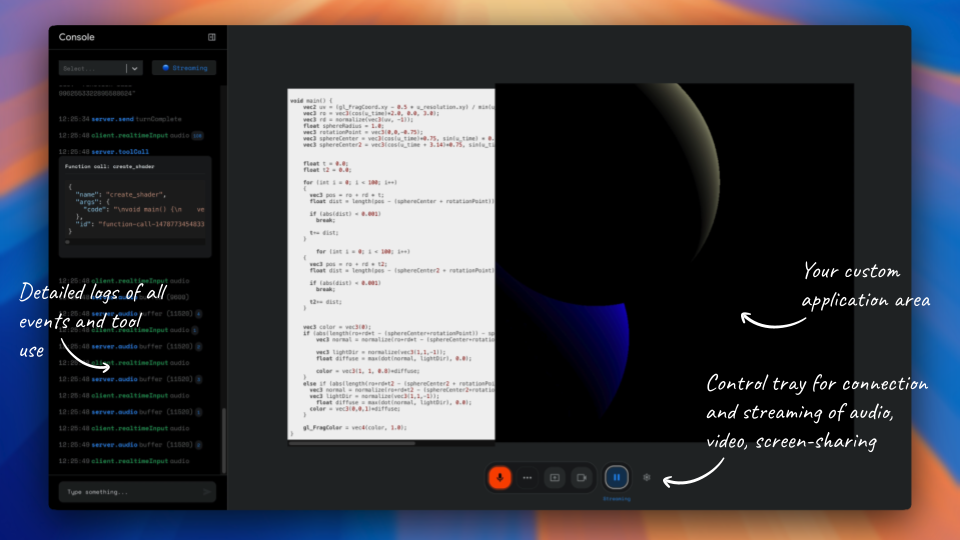

The Gemini Live Web Console is a starter project, written in React + TypeScript, that manages the WebSocket connection, worklet-based audio processing, logging, and streaming of your webcam or screen share.
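To give a feel for the kind of work the audio pipeline takes on, here is a minimal sketch of one typical step: converting the float samples that the Web Audio API produces into 16-bit PCM before they are sent over the socket. The function name `floatTo16BitPCM` is hypothetical and not taken from the repository, and the assumption that the API expects 16-bit PCM input should be checked against the Live API documentation.

```typescript
// Hypothetical helper for illustration; not the repository's actual code.
// Web Audio delivers samples as floats in [-1, 1]. This converts them to
// 16-bit signed integers (assumed here to be the wire format).
function floatTo16BitPCM(samples: Float32Array): Int16Array {
  return Int16Array.from(samples, (sample) => {
    // Clamp first so out-of-range samples saturate instead of wrapping.
    const clamped = Math.max(-1, Math.min(1, sample));
    // Scale asymmetrically: the negative range reaches -32768, the
    // positive range reaches 32767. The typed array truncates fractions.
    return clamped < 0 ? clamped * 0x8000 : clamped * 0x7fff;
  });
}

// Usage: convert one small block of samples.
const block = new Float32Array([0, 0.5, -0.5, 1, -1]);
const pcm = floatTo16BitPCM(block);
console.log(Array.from(pcm));
```

In a browser, a conversion like this would usually run inside an audio worklet so that it stays off the main thread; the sketch leaves that wiring out to remain runnable on its own.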

The control tray shows your WebSocket connection status, your microphone activity, and the model's audio activity, along with controls to share your webcam or your screen.

The logs view shows you the details of every message going into and coming out of the model.

Since its initial launch, this project has had an outsized impact. It has been used in Gemini Robotics to showcase embodied reasoning and was featured several times at Google I/O 2025, including demos in the developer keynote, the pre-show, and the AI sandbox. It is often found at the forefront of where AI finds personality.

Supporting Materials

- GitHub: @google-gemini/live-api-web-console

- AI Studio: Native Audio Function Call Sandbox - official product template for building your own

- Google I/O Developer Keynote (May 2025)

- Google - The Keyword: Google introduces Gemini 2.0: A new AI model for the agentic era

- YouTube: Building with Gemini 2.0: Native Tool Use

- Social

- John Maeda's SXSW 2025 Design in Tech Report: How AI will turn Designers into "Autodesigners" featured multiple demonstrations (18:19) | (49:14)

- Jeff Dean at AGI House presenting a demo on top of the console

- On the I/O main stage and vibe coding with p5.js, by Trudy Painter (Google I/O)

- 3D Avatars - icosahedron Alexander Chen

very cool! when people use LLMs like this repeatedly and with very low latencies like it's some kind of free, persistent, almost disposable resource it gives me the "feel the AGI" feels. — Andrej Karpathy (@karpathy) January 10, 2025